2021-2022

ERSP 2021-2022 Projects

In 2021-2022, we had a cohort of 28 scholars from the Computer Science and Electrical and Computer Engineering departments: 17 Computer Science students and 11 Electrical and Computer Engineering students. ERSP scholars worked on research projects in teams of 3-4 students alongside a faculty member and/or graduate student in the College of Engineering. More information about their projects appears below.

Learning from Robots

Scholars: Haeun Kim, Ravel Valdez, Seema Khan, Rose Maxime

Mentor: Dr. Joseph Michaelis (CS)

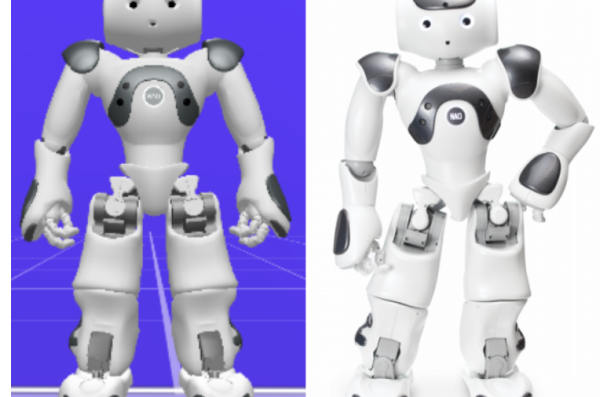

Technology use in schools and classrooms is becoming increasingly prevalent, with computers and learning software supplementing traditional teaching methods. The onset of the COVID-19 pandemic accelerated the transition to online learning tools. To test how effective an online tool such as a virtual robot is compared with a physical robot, we are studying the impact of a virtual robot on teaching geometry concepts to college students who have little understanding of them. The study will involve human participants working with both a virtual robot and a physical robot. Qualitative data analysis will be conducted using ATLAS.ti software.

Tongue-Mobile

Scholars: Lisset Rico, Andrew Gascon, Mariyam Haji, Akhila Ekkurthi

Mentors: Dr. Hannaneh Esmailbeigi (BioE), Dr. Joseph Hummel (CS), Marius Zavistanavicius (ECE)

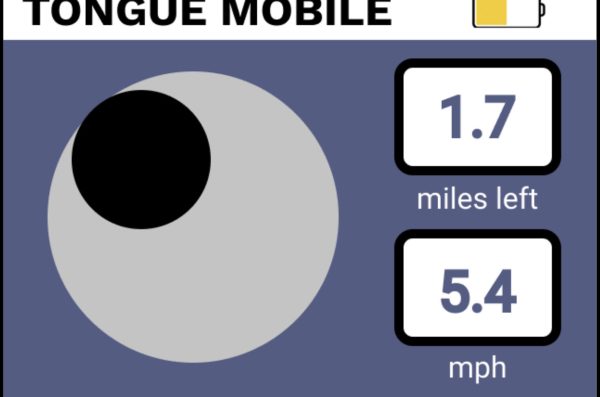

While new wheelchairs have been created to accommodate limited mobility, some restrict the user’s mobility further or rely on uncomfortable devices that distract the user while in the chair. A wheelchair that accommodates a range of disabilities could let the user operate it with little to no assistance. We collaborated with the UIC WTSE Laboratory to advance their solution to this problem: the Tongue-Mobile. The system consists of an intra-oral device, worn in the user’s mouth, that controls a wheelchair equipped with a display screen. It is a re-imagined design of the electric joystick wheelchair. The intra-oral device has a touchpad embedded in a retainer and is connected to the chair so that the chair can receive commands. Our team focused on the additional feature, the display screen. It shows the user what movement the wheelchair is performing and provides other information, such as the remaining battery percentage and how many miles the chair can travel before it needs to be recharged.

Rectennas Harvesting Efficiency in 5G Bands

Scholars: Andres Dimas Jr., Adam Mukahhal, Eric Bugarin

Mentors: Dr. Pai-Yen Chen (ECE), Trung Ha (ECE), Xuecong Nie (ECE)

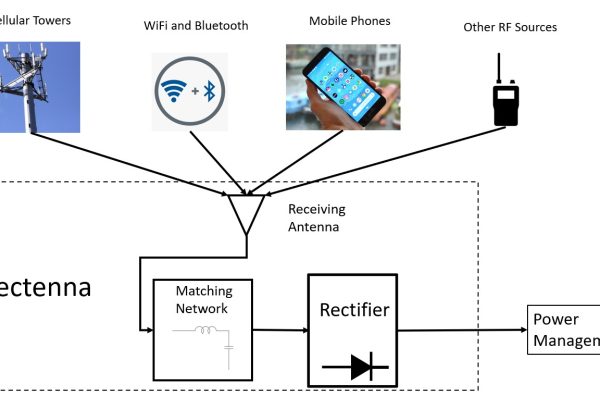

The growing number of Internet of Things devices has created a need to power them without batteries. Because of its higher radiated power density and growing use, 5G has advanced the idea of harvesting radio frequency (RF) signals using rectifying antennas (rectennas). Our group investigates invisible (optically transparent) energy harvesters that collect ambient RF signals and convert them into useful electricity. One of the main goals of the project is to improve the RF-to-DC power conversion efficiency of the rectenna system. Increasing the angular coverage and gain of the antenna can also improve the power conversion efficiency. Our group worked with Dr. Chen’s PhD students on the design, optimization, and testing of rectennas for the 5G band, and we helped optimize the dimensions of the PhD students’ antenna design. Our team has developed a Bruce array antenna that targets a resonant frequency of 1 GHz, as this frequency can reduce the size of the antenna and also works at 5G mid-band. Our team has also developed a rectifier to work with the antenna.
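RF-to-DC power conversion efficiency is commonly defined as the DC power delivered to the load divided by the RF power arriving at the rectifier input. The following is a minimal sketch of that calculation, assuming the DC output is measured as a voltage across a resistive load and the RF input is given in dBm. The function names and example values are illustrative assumptions, not the team's measurement code or results.

```python
def dbm_to_watts(power_dbm: float) -> float:
    """Convert a power level in dBm to watts (0 dBm = 1 mW)."""
    return 10 ** (power_dbm / 10) / 1000.0


def conversion_efficiency(rf_input_dbm: float, dc_voltage: float, load_ohms: float) -> float:
    """Return RF-to-DC efficiency as a fraction: P_dc / P_rf.

    Assumes (hypothetically) that the DC output power is V^2 / R
    for a purely resistive load.
    """
    if load_ohms <= 0:
        raise ValueError("load_ohms must be positive")
    p_rf = dbm_to_watts(rf_input_dbm)      # incident RF power in watts
    p_dc = dc_voltage ** 2 / load_ohms     # DC power dissipated in the load
    return p_dc / p_rf
```

For instance, calling `conversion_efficiency(-10.0, 0.5, 5000.0)` compares 0.05 mW of DC output against 0.1 mW of RF input; these numbers are made up purely to show the arithmetic.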

Wireless Charging Nest

Scholars: Halima M. Muthana, Daniel Gonzalez, Jenson L. Keller, Rawan T. Hussein

Mentor: Dr. Dieff Vital (ECE)

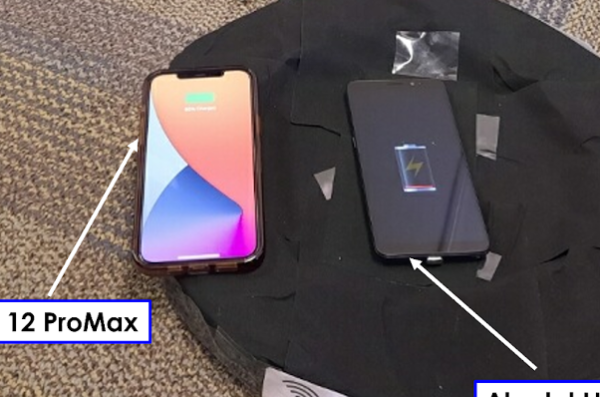

In this project, we demonstrate a wireless charging solution that can charge multiple devices and can be placed on office desks, on dining tables, and in cars, for example to allow fast charging for families with kids on road trips. We built a wireless charging nest from Qi transmitters and receivers that was able to charge multiple devices such as smartphones and watches, as shown in the figure. We introduced a new relay topology that extends the transmitting capability so that more than one device can be charged from a single transmitter. To do so, we used multi-coil transmitting circuits to transfer power to a receiving circuit embedded in the back of the phone or smart watch (in this case, a phone), and with the help of relays we were able to distribute the power further so that no empty spaces remain within the nest. With further development and optimization of the current charging nest, phones and drones could be charged efficiently and farther away from the transmitting sources.

Cheating Detection and Prevention

Scholars: Leeza Andryushchenko, Dua’a Hussein, Agne Nakvosaite, Sharva Darpan Thakur

Mentor: Dr. Joseph Hummel (CS)

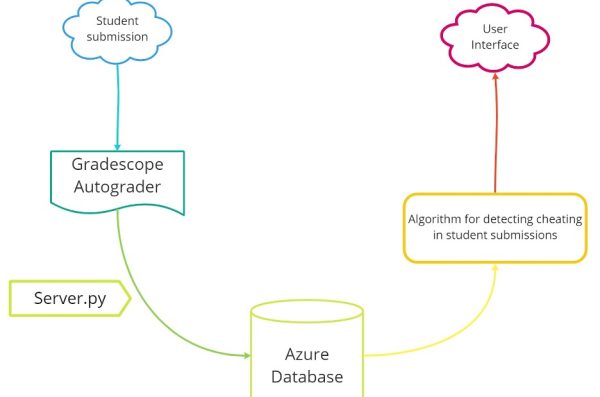

Our team worked on an extension tool that builds on the Gradescope autograder and serves as an additional cheating-detection algorithm. The process is as follows: when a student submits their work on Gradescope, the autograder automatically runs our database-writing script, “server.py”, which records each submission, score, and time in a cloud database on Azure (the database storage belongs to Professor Hummel, who is coordinating our research). After the deadline passes, instructors can interact with another program (labeled “user interface” in the diagram) that fetches the data from the database and outputs statistics about submissions for the given assignment. One of the red flags the algorithm looks for is a drastic increase in a student’s score between submissions made only a short time apart. For example, a student gets 0/100 on a submission but a couple of minutes later resubmits a completely different file and gets a full score. Since it is very unlikely that the student could write a whole new submission in just a couple of minutes, it is reasonable to suspect that cheating may have been involved.
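The score-jump red flag can be illustrated with a short sketch. This is not the team's actual code: the `Submission` record, the `flag_suspicious_jumps` function, and the thresholds (a jump of at least 50 points within 5 minutes) are hypothetical choices made for illustration, and the records are assumed to have already been fetched from the database.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Submission:
    # Hypothetical record shape; the real database schema is not described.
    student_id: str
    score: float
    submitted_at: datetime


def flag_suspicious_jumps(submissions, min_jump=50.0, max_gap=timedelta(minutes=5)):
    """Return (student_id, earlier, later) for each pair of consecutive
    submissions where the score rose by at least `min_jump` points
    within `max_gap` of each other. Thresholds are illustrative."""
    by_student = defaultdict(list)
    for sub in submissions:
        by_student[sub.student_id].append(sub)

    flags = []
    for student_id, subs in by_student.items():
        subs.sort(key=lambda s: s.submitted_at)  # chronological order
        for earlier, later in zip(subs, subs[1:]):
            gap = later.submitted_at - earlier.submitted_at
            jump = later.score - earlier.score
            if jump >= min_jump and gap <= max_gap:
                flags.append((student_id, earlier, later))
    return flags
```

A flag produced this way is only a prompt for an instructor to review the two submissions, in line with the reasoning above; it does not by itself establish that cheating occurred.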

Haptic-Ostensive Action Recognition

Scholars: Ariadna Fernandez, Ayushi Tripathi, Omar Amer, Razi Ghauri

Mentors: Dr. Miloš Žefran (ECE), Ava Mehri Shrevedani

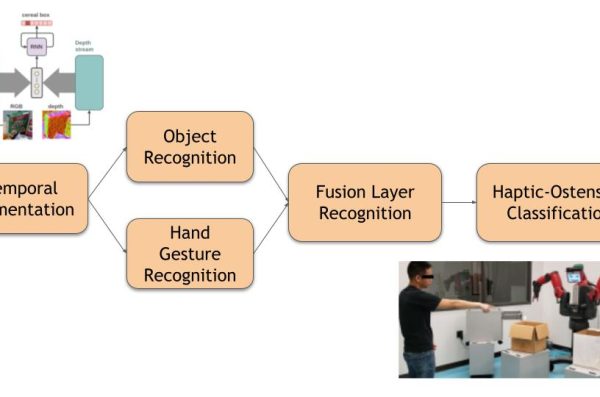

The project consists of implementing a Haptic-Ostensive action recognition model that can be used by an assistive social robot. Assistive robots need to perform collaborative tasks and therefore need to be aware of the objects in their environment. To interact efficiently with humans, the robot must be able to recognize Haptic-Ostensive actions, which involve the manipulation of objects in the surrounding environment. Technologies such as RGB-D cameras, which capture an additional depth channel, help provide a better representation of the surrounding space, objects, and human actions. Using RGB-D frames from video footage of people performing Haptic-Ostensive actions, we are training an algorithm to classify these actions. The recognition algorithm has two main parts: an object recognition part, which uses a recurrent convolutional neural network, and a hand gesture recognition part, which uses a two-stream convolutional neural network. The model takes segmented data as input, registers it as hand and object position data, and processes it all together to aid in classifying the components of the robot’s environment.